PoseiqGPT?

Dear R Dr Broyde (Cc: Avodah),

Over Shabbos, I read your article about getting ChatGPT to discuss whether a kohein in a same-sex marriage may go up to duchen.

I don’t know the strength of your background in Large Language Models in general or GPTs in particular. I am now researching their use at my job. Here is my impressions as a programmer who has put in a couple of months writing clients for a GPT.

Hopefully, much of it is material you already know, and hopeful my attempt to fill in information I didn’t see in your essay won’t come across as talking down to you.

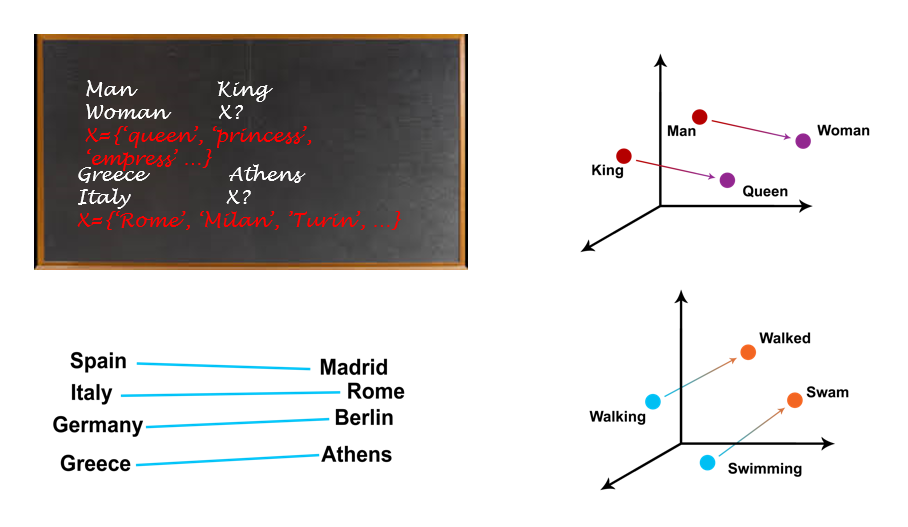

First, a large language model isn’t an AI in the sense of having a good representation of what it is talking about. Words are assigned strings of numbers, vectors, that do correlate to the word’s usage. So that king minus male will bring you to a similar vector as queen minus female. And GPT has a system for using context to distinguish the uses of the word “flies” in

Time flies like an arrow, but

fruit flies like bananas.

Still, it doesn’t so much reason as much as copy patterns, meta-patterns, meta- meta-patterns, etc… that match the texts it was trained on. Kind of like a mega-advanced version of Google’s search window’s ability to guess the rest of your sentence.

It is incredible that it can simulate intelligence by modeling language.

There is something here about Unqelus’s “ruach memalela” (defining the soul Hashem breathed into Adam as a “speaking spirit”, or all the Rishonim who classify the human soul as “medaber” (that of a “speaker”; or the Greek Philosophers who also did so), but I am not sure what that something is.

In any case, I would say that a LLM simulates intelligence, and calling it SI would be more honest. (If harder to fund.) The kind of AI where you would have to wonder if you should say “that” or “whom” is now re-branded Artificial General Intelligence (AGI). Me, I think “SI” vs “AI” would be more honest.

In that a LLM isn’t modeling concepts, it is doing something else which results in something we think of as intelligent. And it certainly cannot stay up in the dorm room at night discussing how one knows whether its mental image of red is my mental image of blue, we just use the same word “red” because we are looking at the same thing. And we don’t even know how much of SI may just be the same pareidolia tendency that turns two headlights and a bumper that curves up at the ends into a face.

“Training” here means tuning the constants in billions of equations until the output succeeds in “predicting” the training set. (Because GPTs are self-training. Some LLMs are trained using a test that an output could pass or fail.)

So that’s the first input to a GPT — the training data it came with. And for ChatGPT, that is the Common Crawl data set, is a publicly available collection of billions of web pages pre-chewed into an easy-to-use format by an educational non-profit (a 501(c)3 charity).

The second possible data set is a database prepared like the training data, but after it was trained. For example, at the hedge fund I am working for, there are people trying to do this with broker reports and analyses so that a chatbot can answer questions about how various companies and industries are doing.

For this you need the programmer interface, not the web dialog page most people are using ChatGPT with.

This material isn’t stored on the GPT itself. It’s your database, that it is searching repeatedly. Kind of like giving a human being a library, if they could read really really quickly.

And it doesn’t have a token limit.

Third is what you did, telling it things in prompts. This information isn’t stored at all. In fact, when you continue a conversation, the web page is sending your new text after sending the entire discussion so far. So that each response requires the entirety as its input; it isn’t even saved in the GPT for the length of the discussion!

In terms of making an AI poseiq…

First I would look at issues like the pro-forma requirements of a poseiq.

If a non-Jew cannot be a poseiq, can a LLM? Discussions of women as posqos would be very related.

But what if it advises without being formal pesaq? Like R Henkin’s model for Yoatzot? (Assuming I understood him when he joined the discussion on Avodah.) Such as requiring a poseiq to vet the results (Quality Assurance) and it is they who are taking responsibility for the decision.

Second, I would look at posqim who take Siyata diShmaya (heavenly assistance) as a part of pesaq seriously. Those who take theoretical questions as less authoritative than practial, lemaaseh, ones. One could say a GPT doesn’t get such Siyata diShmaya, or one could say that given its indeterminism (if the “temperature” setting is above 0.0) it could be an ideal vehicle for such siyata!

Third, I would look at the three ways of giving a GPT information:

Information given in the prompts or even in an external database won’t change how it puts one word after the other. You are just giving it a “what”. The “how” is only during training.

So, if we wanted to feed Bar Ilan into a GPT in a database, it would still be emulating someone who doesn’t think like a poseiq but does have access to a database.

If we want to simulate someone who was meshameish talmidei chakhamim (apprenticed under accomplished rabbis) the way the gemara requires of a rabbi, we would need to train a GPT from scratch on teshuvos and some sefarim of lomdus and sevara. (PoseiqGPT would have more Bar Ilan and Otzar, and less Wikipedia.)

But I still think one would need a Jewish adult, who has the requisite knowing-how-to-think (and not just a simulation) to review and approve the results for it to be a real “pesaq“. And IMHO, a male one.

Recent Comments